This is the final part of a two part series, where I demonstrate the most commonly used methods to concatenate column values into a delimited string, http://jahaines.blogspot.com/2009/06/concatenating-column-values-part-1.html. This time around I will be focusing on the performance of each method described in part 1. The primary methods we are looking at are FOR XML PATH –SubQuery, FOR XML PATH-CrossApply, and a scalar UDF.

I will be using Profiler to capture performance metrics, as SQL Server IO stats are somewhat unreliable, in regards to UDFs. The IO statistics are unreliable for scalar UDFs because the IO does not account for the IO required to obtain the function result. It only returns the IO resulting from the main query. I will post an example of this in a future post. Let’s start by creating a new profiler trace. Open profiler and add the SQL:BatchCompleted counter. You will only need the columns textdata, Reads, and CPU. Add a database filter for ’%tempdb%’ and a filter on the textdata column. The textdata filter should be like ‘%—**%’. --** is a special string we put in our batch. This way we can ensure that we only get the statements we want. Now open management studio and make sure to discard the grid results. You can set this in query options. Query –> Query Options –> Grid –> Discard results after execution. Now let’s run our create/test script to generate our table and objects, as shown below. The main things to note in the below code is the @Batch variable. This variable dictates how many rows your table will contain. I ran the code below for 5 different table sizes (100,1000,10000, 100000, and 1000000) 10 times each. This gives us a pretty solid average, for our tests. Your results may differ from mine, as there are lots of factors that can influence the result of performance counters, but you should still be in the same ballpark. In the end I created 10 trace files, each having 10 executions of the code below. The break down was 5 trace file where a valid predicate was not used and 5 trace files where the predicate was used. Note: The predicate I am referring too is commented out in the below code. Just uncomment it to run the predicate version of the code. If you want all the test files I used, you can download them here: http://cid-6f041c9a994564d8.skydrive.live.com/self.aspx/.Public/Concatenating%20Columns%20Part%202/ConcatenateCols%7C_Pt2.zip

SET NOCOUNT ON;

GO

USE [tempdb]

GO

IF OBJECT_ID('dbo.TestData') IS NOT NULL

BEGIN

DROP TABLE dbo.[TestData];

END

GO

DECLARE @Batch INT

SET @Batch = 1000000 –<-----Number of records in table

SELECT TOP (@Batch)

SomeID = ABS(CHECKSUM(NEWID()))%((@Batch/100)*25)+1,

SomeCode = CHAR(ABS(CHECKSUM(NEWID()))%26+65)

+ CHAR(ABS(CHECKSUM(NEWID()))%26+65)

INTO dbo.TestData

FROM Master.dbo.SysColumns t1,

Master.dbo.SysColumns t2

GO

CREATE CLUSTERED INDEX cl_idx_SomeID_TestData ON [dbo].[TestData](SomeId)

GO

CREATE INDEX IXC_TestData_Cover1 ON dbo.TestData(SomeCode)

GO

USE [tempdb]

GO

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[ConcatenateCols]') AND type in (N'FN', N'IF', N'TF', N'FS', N'FT'))

DROP FUNCTION [dbo].[ConcatenateCols]

GO

CREATE FUNCTION dbo.ConcatenateCols(@Id INT)

RETURNS VARCHAR(MAX)

AS

BEGIN

DECLARE @RtnStr VARCHAR(MAX)

SELECT @RtnStr = COALESCE(@RtnStr + ',','') + SomeCode

FROM dbo.TestData

WHERE SomeId = @Id

RETURN @RtnStr

END

GO

DBCC DROPCLEANBUFFERS;

DBCC FREEPROCCACHE;

GO

--**

SELECT

SomeId,

dbo.ConcatenateCols(SomeId) AS Cols

FROM [dbo].[TestData]

--WHERE

-- [SomeId] = 18

GROUP BY

SomeId

GO

DBCC DROPCLEANBUFFERS;

DBCC FREEPROCCACHE;

GO

--**

SELECT

SomeId,

STUFF(

(

SELECT ',' + CONVERT(VARCHAR(5),t2.[SomeCode])

FROM dbo.[TestData] t2

WHERE

t1.[SomeId] = t2.[SomeId]

FOR XML PATH('')

)

,1,1,'') AS Cols

FROM dbo.[TestData] t1

--WHERE

-- [SomeId] = 18

GROUP BY

SomeId

GO

DBCC DROPCLEANBUFFERS;

DBCC FREEPROCCACHE;

GO

--**

SELECT

t1.SomeId,

MAX(STUFF(t2.x_id,1,1,'')) AS Cols

FROM dbo.[TestData] t1

CROSS apply(

SELECT ',' + t2.SomeCode

FROM dbo.[TestData] t2

WHERE t2.SomeId = t1.SomeId

FOR xml PATH('')

) AS t2 (x_id)

--WHERE

-- [SomeId] = 18

GROUP BY

t1.SomeId

GO

I am not going to show the methods used to load a trace file into a table because that is outside the scope of this post. You can download all the .sql files for this post using the link at the top or bottom of this page. Essentially, I used the system function to pull in the trace file and I inserted the data into a table in tempdb. Once the data is in tempdb, I used the pivot function to pivot the data into a format Excel could use to create a chart. From there I just had to copy the results and paste them in Excel (to generate the chart). Okay, now that I have laid all the ground work, let’s get to the numbers. I will start with the reads for the query that is using a predicate.

As you can see, the number of reads is very much alike for all three methods. The numbers of reads stays pretty constant for each query across subsequent runs, with differing distributions of data. This result makes sense because the number of reads does not need to change because the optimizer is able to seek the data, which excludes the need to look through additional pages. The best and worst performing methods in this scenario is quite obvious to see. The sub query method consistently had lesser reads, while the other methods require more reads. The cross apply method is by far the worst choice here. Next let’s look at the the reads for the query without a predicate.

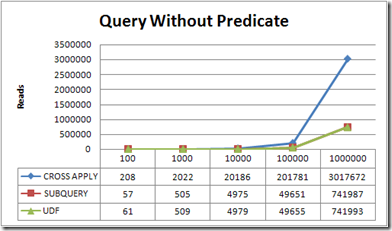

These results are are closer to what one would expect. Essentially, as the number of rows increases, so does the number of reads. In this case both the reads and the table size increase by a factor of 10. The UDF and the sub query methods are neck-and-neck for this test, but if I had to choose I would say the sub query method wins. The cross apply method is by far the worst choice again. Now let’s talk about the CPU usage for each query.

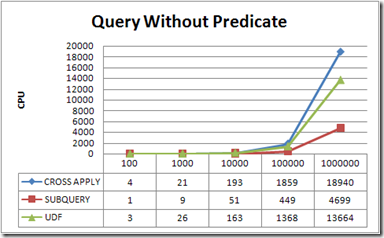

No, real big surprises here. The CPU time increase exponentially based on the number of rows being processed. The results are pretty consistent with our previous results. The sub query is by far the best performing method and the cross apply is the worst.

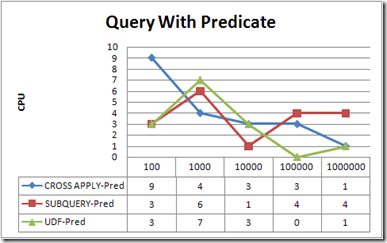

The CPU times for the query with predicate deviates so little that it is pretty inconsequentially, but notable. I would say the UDF method is the best because it has a lesser cumulative CPU time.

So that’s it…. what have we learned? My take away is the same as it was coming into this test. You should always test each method before saying any method is better than another. Performance depends on many factors that may be different in your environment. Our ultimate goal is to create processes that works best for our environment, so we should never limit our options. If I had to choose a “winning” method for this test, I would choose the FOR XML PATH – sub query method because it consistently has lesser reads and CPU time.

If you want all the files that I used for these tests, you can download them here: http://cid-6f041c9a994564d8.skydrive.live.com/self.aspx/.Public/Concatenating%20Columns%20Part%202/ConcatenateCols%7C_Pt2.zip

5 comments:

Hi Adam...

I didn't read this blog entry until today, and it was timely because I was playing with FOR XML PATH in preparation for a blog post of my own.

Like you, I started to put together a query using CROSS APPLY because of readability, and my eyes popped out when I saw your charts on the reads and CPU time and how horribly the CROSS APPLY approach performed.

In looking at the query plan for the subquery method vs the CROSS APPLY method, it looks like the optimizer is "smarter" in processing the subquery method because it does the GROUP BY on SomeID first, and THEN it will execute the FOR XML subquery for each of those already-distinct-and-grouped SomeIDs. For your 1,000,000 row table, your random generation of SomeID created 250,000 distinct values, so it only executed the FOR XML subquery 250,000 times.

On the other hand, the CROSS APPLY method will execute the FOR XML for EVERY SINGLE ONE of those 1,000,000 rows.

You can see in your statistics that the subquery method took a quarter of the time (and reads) of the CROSS APPLY method, simply because it only did a quarter of the FOR XML executions that CROSS APPLY did.

Thanks for the research... Very enlightening post!

Thanks Brad!! I was a little surprised how differently the subquery method oppose to the cross apply method. It is such a shame because the cross apply method looks so much cleaner :^)

Hi Adam...

Just to follow up...

The CROSS APPLY query can be reconstructed to get rid of the GROUP BY and instead use a derived table in the FROM clause to only pull out the DISTINCT SomeID's:

(Sorry if the formatting doesn't come out right)

SELECT

t1.SomeId,

STUFF(t2.x_id,1,1,'') AS Cols

FROM (SELECT DISTINCT SomeID FROM dbo.[TestData]) t1

CROSS APPLY (

SELECT ',' + t2.SomeCode

FROM dbo.[TestData] t2

WHERE t2.SomeId = t1.SomeId

FOR xml PATH('')

) AS t2 (x_id)

This has identical performance to the non-CROSS-APPLY method.

So we don't have to discount CROSS APPLY altogether.

I believe you may also know about the way of dbx file repair

Thiis is awesome

Post a Comment