Have you ever had a query that runs perfectly fine one day and the then all of a sudden starts getting bombarded with blocking transactions? Believe it or not this is not than uncommon an occurrence and more interestingly can occur when no changes occur in the schema at all! Unbeknownst to most, you are susceptible to an imaginary data distribution tipping point that can go south at any point in time, if your application creates a specific type of workload. Let’s dig deeper to find out what causes this problem.

I will start off by creating some sample data.

USE [tempdb] GO IF EXISTS(SELECT 1 FROM sys.tables WHERE NAME = 'TestData') BEGIN DROP TABLE dbo.[TestData]; END GO CREATE TABLE dbo.TestData( RowNum INT PRIMARY KEY CLUSTERED, SomeBit INT, SomeCode CHAR(2) ); GO INSERT INTO dbo.TestData SELECT TOP 5000 ROW_NUMBER() OVER (ORDER BY t1.NAME) AS RowNumber, CASE WHEN ROW_NUMBER() OVER (ORDER BY t1.NAME) %5 = 0 THEN 1 ELSE 0 END AS SomeBit, 'A' AS SomeCode FROM Master.dbo.SysColumns t1, Master.dbo.SysColumns t2 GO

Nothing new here, just a table with some data. Now I will begin a transaction and run a simple UPDATE statement.

BEGIN TRANSACTION UPDATE dbo.TestData SET SomeCode = 'B' WHERE somebit = 0

Now run a simple select statement against the table in a new query window.

SELECT RowNum FROM dbo.TestData WHERE RowNum = 1000 /* RowNum ----------- 1000 */

The query returned a resultset, just as we thought it would. What I wanted to show here is that we currently do not have a blocking problem and users can still access the rows that do not have a SomeBit value of 0. SQL Server will try to take the lowest or most granular lock possible when satisfying a query, such that other queries can still do what they need to. Obviously there are limitations to this and SQL Server reacts differently based on system responsiveness and pressures. You can verify that you cannot access a row with a SomeBit value of 0 by changing the predicate to a number that is not divisible by 5.

Okay…. big deal…. you probably already know this, but lets suppose that your manager tells you to migrate data from an existing system into this table. The flat file has a measly 3000 rows is it, so its overall impact should really have no implications on our system right???? Let’s find out. Please note that this problem can manifest itself by hitting a tipping point of data also… Meaning it does not take a huge influx of data to cause this problem, and this is why this problem can appear seemingly out of nowhere.

I will load the data with the same insert statement to mimic our data migration.

INSERT INTO dbo.TestData SELECT TOP 3000 CASE WHEN ROW_NUMBER() OVER (ORDER BY t1.NAME) %5 = 0 THEN 1 ELSE 0 END AS SomeBit, 'A' AS SomeCode FROM Master.dbo.SysColumns t1, Master.dbo.SysColumns t2 GO

Now let’s run our same update transaction again.

UPDATE dbo.TestData SET SomeCode = 'B' WHERE somebit = 0

Now run the query below in a new window.

SELECT RowNum FROM dbo.TestData WHERE RowNum = 1000

What you should see is the query is now blocked. Keep in mind that nothing changed on this server, except new data was inserted into the table. Any ideas why this problem is now occurring? If you haven’t figured it out yet, this problem is caused by lock escalation, http://msdn.microsoft.com/en-us/library/ms184286.aspx. When SQL Server meets certain thresholds or memory pressure exists, SQL Server will escalate locks. Lock escalation unfortunately goes from very granular locks to not so granular locks. Lock escalation will go straight to a table lock from a rid/key or page lock. What does this mean? It means that SQL Server can save memory by acquiring a less granular lock, oppose to a lot of granular locks. You can look at the transaction locks for each of the UPDATE statements to verify lock escalation is occurring.

Note: I removed intent locks as those locks from the resultset.

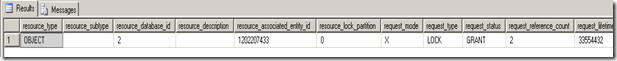

SELECT * FROM sys.[dm_tran_locks] WHERE [request_session_id] = xx AND [request_mode] = 'X' AND [request_mode] NOT LIKE 'I%'

Initial Update Query

Lock Escalated Update Query

If you run a lot of big DML transactions in your environment and still require concurrency, you may want to pay careful attention to lock escalation; otherwise, you may experience an abnormally large number of blocks. While lock escalation is great in most cases, in others it is less than ideal.

Here are the thresholds as described by BOL, http://msdn.microsoft.com/en-us/library/ms184286.aspx

Lock Escalation Thresholds

Lock escalation is triggered when lock escalation is not disabled on the table by using the ALTER TABLE SET LOCK_ESCALATION option, and when either of the following conditions exists:

-

A single Transact-SQL statement acquires at least 5,000 locks on a single nonpartitioned table or index.

-

A single Transact-SQL statement acquires at least 5,000 locks on a single partition of a partitioned table and the ALTER TABLE SET LOCK_ESCALATION option is set to AUTO.

-

The number of locks in an instance of the Database Engine exceeds memory or configuration thresholds.

If locks cannot be escalated because of lock conflicts, the Database Engine periodically triggers lock escalation at every 1,250 new locks acquired.

Now that we have identified the problem, what can we do to fix it? There are a number of options that can be used to solve this problem. One solution is to use the nolock hint in your query or the read uncommited isolation level. This particular solution is not recommend for all OLTP environments and should only be implemented with careful consideration. The nolock hint and the read uncommitted isolation level can return inconsistent data. If and only if this is okay, should you consider this as a solution. Another solution is to use the read committed snapshot isolation level or the snapshot isolation level. Both of these solutions require tempdb overhead, but do return transactional consistent data. You can read more about these isolation levels here, http://msdn.microsoft.com/en-us/library/ms189122.aspx. The other approach is to remove lock escalation. You can remove lock escalation at the instance level (trace flags 1211 and 1224) or at the table level in SQL Server 2008, using the ALTER TABLE statement. Obviously, removing lock escalation should be carefully thought out and tested. For more information on these trace flags, please visit the storage engine’s blog http://blogs.msdn.com/b/sqlserverstorageengine/archive/2006/05/17/lock-escalation.aspx.

There you have it. I have shown you how simply adding data to your table can literally make a fast application a blocking nightmare overnight. Before you go off and start adding nolock hints or changing your isolation level, please understand that you should only take these steps if you are experiencing this problem. The reality is an OLTP system should not be holding onto more than 5000 (a lock escalation tipping point) locks because transactions should be short and efficient. If you are experiencing this problem, your database is probably OLAP, you are missing indexes, or you queries are not typical OLTP transactions. For example, you probably have large DML transactions and users trying to query the table concurrently.

Until next time happy coding.

4 comments:

you can quickly reproduce the sql 2000 recover database process with another utility for data recovery

We're glad to know more and read this great review. I'm happy to share with cheap quotes insurance that is supported by best auto insurance companies. Customer could save on homeowners insurance rates which allow customers to get cheap policy.

Outstanding blog thanks for sharing such wonderful blog with us ,after long time came across such knowlegeble blog. keep sharing such informative blog with us.

Check out : machine learning tution in chennai

artificial intelligence and machine learning course in chennai

best training insitute for machine learning

Thank you so much for sharing such an interesting blog! I found the information provided to be really valuable and informative. It's great that you also gave me the chance to express my opinion. I truly appreciate the hard work you've put into creating this content. I'm eagerly looking forward to reading your next blog post, and I'm sure it will be just as fantastic as this one! Keep up the excellent work!

Here is sharing some data analytics Salesforce CPQ overview concepts may be its helpful to you.

Salesforce cpq training

Post a Comment