I was reading an article last week and saw a snippet of code that claimed to make fully optimized code, more efficient. The article itself was not written by the original content creator. With a little investigative work, I came across the website and author who first developed the presented performance tuning techniques. The website that hosted the content is http://www.sqlworkshops.com/ and the original author is Ramesh Meyyappan, rmeyyappan@sqlworkshops.com. Ramesh is SQL Server consultant and professional trainer, who has worked previously with Microsoft and has real world knowledge of performance tuning techniques. On the SQLWorkshops website, Ramesh offers 3 free webcasts that demonstrate how to performance tune queries. He focuses on query memory, parallelism, MAXDOP (MAX Degree of parallelism), and wait stats. I recommend that all database professionals view these webcasts. There is a lot of great content presented in these webcasts that you cannot find in a classroom. In this post, I will take a few of the methods provided and explain them. I will also provide additional methods that were not covered in the webcast. On a side note, if you have not figured out the challenge Ramesh presented, I will show you a few of the solutions I came up with, in my next installment.

The first optimization technique I will explore deals with data type mismanagement. The two data types I will be evaluating in this post are VARCHAR and CHAR. There are a lot of do’s and don’ts regarding these two data types, on the Internet. I will not engage the pros and cons in this post. The main point is a performance penalty can occur, if the wrong data type is chosen. You should always choose a data type that most closely resembles the size and structure of your data. Experienced database professionals know to use data types that closely resemble the data being stored; however, inexperienced database professionals fail to see the long term impact of poor design choices. Unfortunately, it is quite common for database professionals to use a catch all data type like VARCHAR(8000) or CHAR(2000), without fully understanding the data itself. In most cases, choosing the wrong data type results in wasted storage and slower query performance, as I will show in this post. I will get this party started by creating my sample table and data.

USE [tempdb] GO SET NOCOUNT ON GO IF EXISTS(SELECT 1 FROM sys.tables WHERE NAME = 'TestData') BEGIN DROP TABLE dbo.[TestData]; END GO CREATE TABLE dbo.TestData( RowNum INT PRIMARY KEY, SomeId INT, SomeCode CHAR(2000) ); GO ;WITH L0 AS (SELECT 1 AS C UNION ALL SELECT 1) --2 rows ,L1 AS (SELECT 1 AS C FROM L0 AS A, L0 AS B) --4 rows (2x2) ,L2 AS (SELECT 1 AS C FROM L1 AS A, L1 AS B) --16 rows (4x4) ,L3 AS (SELECT 1 AS C FROM L2 AS A, L2 AS B) --256 rows (16x16) ,L4 AS (SELECT 1 AS C FROM L3 AS A, L3 AS B) --65536 rows (256x256) ,L5 AS (SELECT 1 AS C FROM L4 AS A, L4 AS B) --4,294,967,296 rows (65536x65536) ,Number AS (SELECT row_number() OVER (ORDER BY (SELECT 0)) AS N FROM L5) INSERT INTO dbo.TestData SELECT N AS RowNumber, ABS(CHECKSUM(NEWID()))%1000000+1 AS SomeId , REPLICATE('a',ABS(CHECKSUM(NEWID())) % 2000 + 1) AS SomeCode FROM Number WHERE [N] <= 50000 GO UPDATE STATISTICS dbo.[TestData] WITH FULLSCAN; GO

The first thing I want to illustrate is the average size of our SomeCode column. We are using a CHAR(2000) data type; however, we are storing much less data than this on average.

SELECT AVG(LEN(SomeCode)) AS AvgLen FROM dbo.TestData WHERE [RowNum] < 35000 /* AvgLen ----------- 997 */

As you can see from the query above the average SomeCode length is 997 bytes; however, we are storing 2000 bytes per row. This means we are consuming 50% more storage, per row. Using a char data type is typically faster than using a VARCHAR data type; however, the cost of storing CHAR data types usually offset the performance gain. If I were to choose a VARCHAR(2000) data type, I would consume less storage, which translates into faster table scans, smaller tables, faster backups, faster restores etc…. Performance will increase because less storage, is directly correlated to less pages. The bottom line is less is faster.

The query I will be demonstrating returns a range of rows, where the sort order is different than the primary clustered key sort. The IO stats will be the same across all variants of this query, so the counter will not illustrate a proper delta. I will be using elapsed time to illustrate the delta between queries, with varying predicates.

Let’s turn on STATISTICS TIME and NOCOUNT.

SET NOCOUNT ON; GO SET STATISTICS TIME ON; GO

Now run the following query. Make sure to make query results go to text. (Note: I assign columns to local variables to avoid results being printed). Run the query a couple of times to warm the cache and get a baseline CPU and elapsed time.

DECLARE @RowNum INT,@SomeInt INT, @SomeCode CHAR(2000) SELECT @RowNum = [RowNum], @SomeInt = [SomeId], @SomeCode = [SomeCode] FROM dbo.[TestData] WHERE [RowNum] < 3000 --3000 is in memory and 3500 sort in tempdb ORDER BY SomeId

The query is very fast and performs within our SLA of 2 seconds. Let’s see what happens when I increase the number of rows in the predicate to 3500.

DECLARE @RowNum INT,@SomeInt INT, @SomeCode CHAR(2000) SELECT @RowNum = [RowNum], @SomeInt = [SomeId], @SomeCode = [SomeCode] FROM dbo.[TestData] WHERE [RowNum] < 3500 --3000 is in memory and 3500 sort in tempdb ORDER BY SomeId

Wow… by returning 5000 more rows, the query is over 14 times slower! You may be thinking that the speed difference is neglible, but on production systems the elapsed time delta can be quite extensive, at which point performance is a real problem. Let’s check the execution plan for each query side by side.

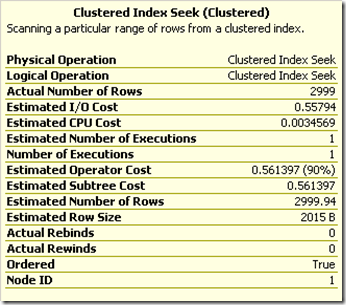

Well that is not much help….. both of our query plans look good. There is really not much you can do to make the query perform better. The index is doing a great job at returning the data., but that pesky sort is degrading performance. The first thing I need to investigate is the detail of the query plan.The first query plan attribute I will look at is the clustered index seek estimated row size. The estimated row size is derived from statistics and some internal calculations.

The row size is estimated to be 2015 bytes for each row in the clustered index seek. The row size is derived from native data types. For example, we are using a CHAR(2000) and two integer columns. This makes our row size 2000 (1 byte per char)+ 4 (int) + 4 (int) = 2008. As you can see, 2008 is less than 2015. The additional 7 bytes are associated to row overhead and the NULL bitmap. The sort operator is also estimated a row size of 2015. All of the estimates play a part in dictating how much memory will be granted to the query. Next I am going to put the query into a while loop, so I can see the memory grants. Open two new query windows and paste the below code. Make sure to change the RowNum to 3000, in one of the query windows. Take note of the session id of each of the windows.

WHILE 1 = 1 BEGIN DECLARE @RowNum INT,@SomeInt INT, @SomeCode CHAR(2000) SELECT @RowNum = [RowNum], @SomeInt = [SomeId], @SomeCode = [SomeCode] FROM dbo.[TestData] WHERE [RowNum] < 3500 --SWITCH OUT TO 3500 TO 3000 ORDER BY SomeId END

In a completely new window, paste the below DMV query. This query will return the memory grants for each of the sessions. The key columns from this query are Max_Used_Memory and Granted_Memory. If the Max and Granted memory are the same, it is likely that the query is consuming all the RAM granted to the query and the optimizer has to rely on tempdb to help with some operations.

SELECT [granted_memory_kb],[required_memory_kb],[max_used_memory_kb] FROM sys.dm_exec_query_memory_grants WHERE [session_id] = 56 SELECT [granted_memory_kb],[required_memory_kb],[max_used_memory_kb] FROM sys.dm_exec_query_memory_grants WHERE [session_id] = 60

All the execution plan details look fine, so I will dig a bit deeper into tempdb to unearth the real problem. Let’s have a look at the tempdb IO stats to see if either query is using tempdb to perform any operations.

I will start by executing the “fast” query.

SELECT * FROM sys.[dm_io_virtual_file_stats](2,1) --FAST DECLARE @RowNum INT,@SomeInt INT, @SomeCode CHAR(2000) SELECT @RowNum = [RowNum], @SomeInt = [SomeId], @SomeCode = [SomeCode] FROM dbo.[TestData] WHERE [RowNum] < 3000 --3000 is in memory and 3500 sort in tempdb ORDER BY SomeId SELECT * FROM sys.[dm_io_virtual_file_stats](2,1) GO

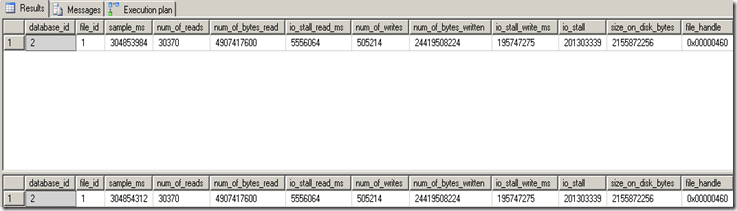

The important columns to look at are num_of_reads, num_of_bytes_read, num_of_writes, num_of_bytes_written. The number of reads and writes before and after the query are exactly the same. This means the optimizer did not have to use tempdb operations. Next, I will execute the slow query, which uses a predicate of less than 3500.

SELECT * FROM sys.[dm_io_virtual_file_stats](2,1) --FAST DECLARE @RowNum INT,@SomeInt INT, @SomeCode CHAR(2000) SELECT @RowNum = [RowNum], @SomeInt = [SomeId], @SomeCode = [SomeCode] FROM dbo.[TestData] WHERE [RowNum] < 3500 --3000 is in memory and 3500 sort in tempdb ORDER BY SomeId SELECT * FROM sys.[dm_io_virtual_file_stats](2,1) GO

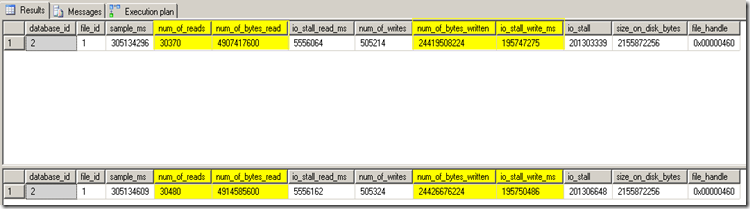

By George…… I think we have something!!! Why does the second query have more reads and writes into tempdb? The answer is the sort operation is being done in memory for the “fast” query and the sort is being done in tempdb for the “slow” query. Another method you can use to identify tempdb sort operations is Sort Warninigs. When tempdb is used to perform sort operations the sort warnings counter will display it. Below is a screenshot of the counter and the description.

Now that I have identified the problem, how do I solve it? There are a couple of ways to solve this problem. One solutions is to increase the estimated row size, so the optimizer grants more memory to the query, which in turn allows the sort to fit into memory. The second option is to decrease the row size, so the sort fits into memory. Let’s see this in action.

DECLARE @RowNum INT,@SomeInt INT, @SomeCode VARCHAR(2000) SELECT @RowNum = [RowNum], @SomeInt = [SomeId], @SomeCode = CAST([SomeCode] AS VARCHAR(2000)) FROM dbo.[TestData] WHERE [RowNum] < 3500 --3000 is in memory and 3500 sort in tempdb ORDER BY SomeId

This method uses cast to convert the CHAR(2000) column to a VARCHAR(2000). Casting the column to a VARCHAR(2000) helps to reduce the estimated row size. The estimated row size of a VARCHAR column is calculated as 50% of the total size. The estimated row size is 50% of the VARCHAR column because 50% is a safe estimate because it really does not know how full the row is. Generally speaking, VARCHAR columns never use 100% of a variable column anyway, so this helps SQL Server save resources. In our case, a VARCHAR(2000) is estimated to be approximately 1000 bytes. Let’s have a look at the execution plan.

Unfortunately the conversion itself is not enough to optimize this query. Let’s try to trim the CHAR column prior to the cast, so the row size is decreased. The interesting thing here is the optimizer still reports the same estimated row size; however, the sort now occurs in memory. This will help the sort fit into memory because the estimated row size is significantly less than 1019 for each row, so the optimizer is unintentionally giving extra memory to process this query. Let’s see this in action.

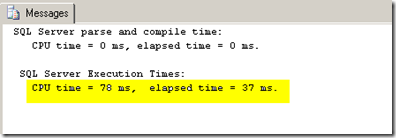

DECLARE @RowNum INT,@SomeInt INT, @SomeCode VARCHAR(2000) SELECT @RowNum = [RowNum], @SomeInt = [SomeId], @SomeCode = RTRIM(CAST([SomeCode] AS VARCHAR(2000))) FROM dbo.[TestData] WHERE [RowNum] < 3500 --3000 is in memory and 3500 sort in tempdb ORDER BY SomeId

That’s more like it. If you run the query, with sys.dm_io_virtual_stats, you will see the query no longer uses tempdb to sort the rows. This method works works well for queries where the predicate is < 3500. If the returned range is significantly larger than 3500 rows, it is likely that the sort would spill into tempdb. The idea here is to cater to every day usage patterns and let the exceptions take longer. It should also be noted that if the CHAR(2000) column is nearly full for every row, the RTRIM method will not have the same impact. When the RTRIM method is not cutting the mustard, it is time to go with another option…. such as bloating the estimated row size.

This is the solution presented in Ramesh’s webcast. To do this we will use the same cast conversion, to introduce a compute scalar into the query plan, but this time we will make the value larger. When choosing a larger number it is important to use a number that is not too large, as this could have an adverse effect on query performance, or other queries running on the server. More information can be obtained via the webcast.

DECLARE @RowNum INT,@SomeInt INT, @SomeCode VARCHAR(4200) SELECT @RowNum = [RowNum], @SomeInt = [SomeId], @SomeCode = CAST([SomeCode] AS VARCHAR(4200)) FROM dbo.[TestData] WHERE [RowNum] < 3500 --3000 is in memory and 3500 sort in tempdb ORDER BY SomeId

Note: I am using SQL Server 2008 to perform my tests, but I have tested both of these solutions in SQL Server 2005 and obtained similar results.

There you have it. I have done an introduction to performance tuning queries that seemingly have an ideal execution plan. A lot of the concepts presented here were taken from http://sqlworkshops.com . SQLWorkshops.com provides 3 webcasts that present a wealth of performance tuning tips that will benefit all levels of database professionals. Stay tuned for my next post!!! I will be dissecting more query optimization techniques including two solutions to the TOP 101 challenge.

Until next time, happy coding.

5 comments:

Excellent stuff, Adam... I'm looking forward to your next post.

Fantastic post. Thanks.

Thank you for writing about useful review. It needs to know that mobile software development could help in your business by installing custom development software products.

Thanks for well done posts. Let me mention about home insurance cost for USA policy owners homeowners protection companies. Save on free online rates on homeowners insurance.

Thank you for posting this useful review. You have nice possibility to choose online affiliate program. The most common casino affiliate programs such as 32 Red Affiliate and great poker rooms such as redbet poker affiliates.

Post a Comment