Below is a list of myths that I have encountered and will be discussing in this series.

Table Variables do not exist in TempDB

Lets start with one of my favorite myths. There is a very common misconception that table variables do not exist in TempDB, but only in memory. This is absolutely false. Table variables exist in memory, when they are small enough to fit, but they always consume storage in TempDB and make entries into the TempDB log.

The example below demonstrates how you can view the table makeup in sys.tables.

--Make the master db the current db USE [master] GO --Declare a table variable DECLARE @t TABLE( Id INT, Col CHAR(1) ); --Get Table Variable Definition SELECT t.NAME,c.name,ty.name, [c].[max_length] FROM tempdb.sys.tables t INNER JOIN tempdb.sys.columns c ON [t].[object_id] = [c].[object_id] INNER JOIN tempdb.sys.types ty ON [c].[system_type_id] = [ty].[system_type_id] WHERE t.name LIKE '#%'

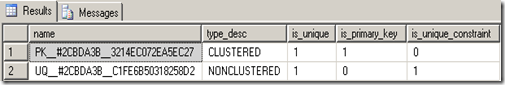

Table variables cannot have indexes

The next myth also deals with table variables. It is often thought that table variables cannot have indexes, which is absolutely false. Table variables can have indexes, if you specify them at the time of creation. The only restriction is that you cannot create non-unique indexes. It is important to remember that even though an index exists on the table variable, the optimizer still does not maintain statistics on table variables. This means the optimizer will still assume one row, even if a scan is done.

DECLARE @t TABLE( Id INT PRIMARY KEY CLUSTERED, Col CHAR(1) UNIQUE ); --Get Table Variable Definition SELECT i.name, i.type_desc,i.is_unique,i.is_primary_key,i.[is_unique_constraint] FROM tempdb.sys.tables t INNER JOIN tempdb.sys.indexes i ON i.[object_id] = t.[object_id] WHERE t.name LIKE '#%'

Results:

Putting a db in simple recovery stops the log from growing

One of the most misunderstood myths in the SQL Server realm is how the simple recovery model actually works. Some believe that by changing the recovery model to simple the transaction log will not grow. This is wrong on so many levels. If the transaction log were not able to grow, how would any transactions be rolled back? When the recovery model is set to simple the transaction log still has to grow to accommodate large transactions. Let’s have a look at this in action.

SET NOCOUNT ON GO USE [master] GO IF db_id('TestSimpleRecovery') IS NOT NULL BEGIN DROP DATABASE TestSimpleRecovery; END GO --Create DB CREATE DATABASE TestSimpleRecovery; GO --Change Recovery Model To Simple ALTER DATABASE TestSimpleRecovery SET RECOVERY SIMPLE GO --Change the FileGrowth To 100MB ALTER DATABASE TestSimpleRecovery MODIFY FILE(NAME = TestSimpleRecovery_Log,FILEGROWTH=100MB); GO --Switch DB Context USE [TestSimpleRecovery] GO --Get Current Log Size (before XACT) SELECT ((size * 8) / 1024.) / 1024. AS SizeMBsBeforeTransaction FROM sys.[master_files] WHERE type = 1 AND [database_id] = db_id('TestSimpleRecovery') GO --Drop Sample Table IF EXISTS(SELECT 1 FROM sys.tables WHERE NAME = 'TestData') BEGIN DROP TABLE dbo.[TestData]; END GO CREATE TABLE dbo.TestData( RowNum INT, SomeId INT, SomeCode CHAR(2) ); GO DECLARE @i INT SET @i = 1 BEGIN TRANSACTION WHILE @i < 100 BEGIN INSERT INTO dbo.TestData SELECT TOP 1000 ROW_NUMBER() OVER (ORDER BY t1.NAME) AS RowNumber, ABS(CHECKSUM(NEWID()))%2500+1 AS SomeId, CHAR(ABS(CHECKSUM(NEWID()))%26+65) + CHAR(ABS(CHECKSUM(NEWID()))%26+65) AS SomeCode FROM Master.dbo.SysColumns t1, Master.dbo.SysColumns t2 SET @i = @i + 1 END COMMIT TRANSACTION GO --Get Current Log Size (After XACT) SELECT ((size * 8) / 1024.) / 1024. AS SizeMBsAfterTransaction FROM sys.[master_files] WHERE type = 1 AND [database_id] = db_id('TestSimpleRecovery') GO /* SizeMBsBeforeTransaction --------------------------------------- 0.00054931640 SizeMBsAfterTransaction --------------------------------------- 0.09820556640 */

Shrinking a log breaks the log chain

The next myth I will be discussing is shrinking the transaction log. It is often purveyed that shrinking the transaction log breaks the log chain. This is absolutely false! This is a prime example of how confusion can cause misinformation. It is true that backing up the log with the truncate only option breaks the log chain, but this is completely different than shrinking the log. Shrinking the log is used to empty space from a data or log file. Shrinking the log file does not affect the active portion of the log at all, which means the backup chain is fully intact. For more information, you should read this article,

http://msdn.microsoft.com/en-us/library/ms178037.aspx.IF NOT EXISTS(SELECT 1 FROM sys.databases WHERE name = 'ShrinkLog_Test') BEGIN CREATE DATABASE [ShrinkLog_Test]; END GO BACKUP DATABASE [ShrinkLog_Test] TO DISK = 'c:\ShrinkLog_Test.bak' WITH INIT, STATS=10 GO BACKUP LOG [ShrinkLog_Test] TO DISK = 'c:\ShrinkLog_Test.trn' WITH INIT, STATS=10 GO SELECT TOP 1 b.type, b.first_lsn, b.last_lsn FROM msdb..backupset b WHERE b.[database_name] = 'ShrinkLog_Test' ORDER BY [backup_start_date] DESC /* type first_lsn last_lsn ---- --------------------------------------- --------------------------------------- L 41000000005400064 41000000009000001 */ USE [ShrinkLog_Test] GO DBCC SHRINKFILE('ShrinkLog_Test_log',1,TRUNCATEONLY) GO SELECT TOP 1 b.type, b.first_lsn, b.last_lsn FROM msdb..backupset b WHERE b.[database_name] = 'ShrinkLog_Test' ORDER BY [backup_start_date] DESC /* type first_lsn last_lsn ---- --------------------------------------- --------------------------------------- L 41000000005400064 41000000009000001 */ USE master GO RESTORE DATABASE ShrinkLog_Test FROM DISK = 'c:\ShrinkLog_Test.bak' WITH norecovery, REPLACE GO RESTORE log ShrinkLog_Test FROM DISK = 'c:\ShrinkLog_Test.trn' WITH recovery, REPLACE GO /* Processed 168 pages for database 'ShrinkLog_Test', file 'ShrinkLog_Test' on file 1. Processed 3 pages for database 'ShrinkLog_Test', file 'ShrinkLog_Test_log' on file 1. RESTORE DATABASE successfully processed 171 pages in 0.061 seconds (21.780 MB/sec). Processed 0 pages for database 'ShrinkLog_Test', file 'ShrinkLog_Test' on file 1. Processed 5 pages for database 'ShrinkLog_Test', file 'ShrinkLog_Test_log' on file 1. RESTORE LOG successfully processed 5 pages in 0.043 seconds (0.794 MB/sec). */

Backing up the transaction log frees up OS storage>

The next myth is one of the most problematic ones because those who believe this become bedazzled when they backup their transaction log and it does not give the space back to the OS. I would like to point out that I do not recommend shrinking data files unless you absolutely crunched for space. Shrinking the log is the only way to return storage back to the OS.

SET NOCOUNT ON GO USE [master] GO IF db_id('TestSimpleRecovery') IS NOT NULL BEGIN DROP DATABASE TestSimpleRecovery; END GO --Create DB CREATE DATABASE TestSimpleRecovery; GO --Change Recovery Model To Simple ALTER DATABASE TestSimpleRecovery SET RECOVERY FULL GO --Change the FileGrowth To 100MB ALTER DATABASE TestSimpleRecovery MODIFY FILE(NAME = TestSimpleRecovery_Log,FILEGROWTH=100MB); GO BACKUP DATABASE TestSimpleRecovery TO DISK = 'C:\Test.bak' --Switch DB Context USE [TestSimpleRecovery] GO --Get Current Log Size (before XACT) SELECT ((size * 8) / 1024.) / 1024. AS SizeMBsBeforeTransaction FROM sys.[master_files] WHERE type = 1 AND [database_id] = db_id('TestSimpleRecovery') GO --Drop Sample Table IF EXISTS(SELECT 1 FROM sys.tables WHERE NAME = 'TestData') BEGIN DROP TABLE dbo.[TestData]; END GO CREATE TABLE dbo.TestData( RowNum INT, SomeId INT, SomeCode CHAR(2) ); GO DECLARE @i INT SET @i = 1 BEGIN TRANSACTION WHILE @i < 100 BEGIN INSERT INTO dbo.TestData SELECT TOP 1000 ROW_NUMBER() OVER (ORDER BY t1.NAME) AS RowNumber, ABS(CHECKSUM(NEWID()))%2500+1 AS SomeId, CHAR(ABS(CHECKSUM(NEWID()))%26+65) + CHAR(ABS(CHECKSUM(NEWID()))%26+65) AS SomeCode FROM Master.dbo.SysColumns t1, Master.dbo.SysColumns t2 SET @i = @i + 1 END COMMIT TRANSACTION GO --Get Current Log Size (After XACT) SELECT ((size * 8) / 1024.) / 1024. AS SizeMBsAfterTransaction FROM sys.[master_files] WHERE type = 1 AND [database_id] = db_id('TestSimpleRecovery') GO BACKUP log TestSimpleRecovery TO DISK = 'c:\test.trn' GO --Get Current Log Size (After Log Backup) SELECT ((size * 8) / 1024.) / 1024. AS SizeMBsAfterBackup FROM sys.[master_files] WHERE type = 1 AND [database_id] = db_id('TestSimpleRecovery') GO /* Processed 168 pages for database 'TestSimpleRecovery', file 'TestSimpleRecovery' on file 1. Processed 2 pages for database 'TestSimpleRecovery', file 'TestSimpleRecovery_log' on file 1. BACKUP DATABASE successfully processed 170 pages in 0.361 seconds (3.677 MB/sec). SizeMBsBeforeTransaction --------------------------------------- 0.00054931640 SizeMBsAfterTransaction --------------------------------------- 0.09820556640 Processed 1407 pages for database 'TestSimpleRecovery', file 'TestSimpleRecovery_log' on file 1. BACKUP LOG successfully processed 1407 pages in 3.002 seconds (3.659 MB/sec). SizeMBsAfterBackup --------------------------------------- 0.09820556640 */

I am trying to keep this series manageable, so I will stop here and pickup the remaining items in part 2, of this series. If you have any myths that you would like to see busted or have myths you would like to share, please feel free to leave me comments. Stay tuned part 2!

Until next time happy coding.

6 comments:

Good stuff.

Typo: "some many" should be "so many"

Thanks Brian. I dont know how I could have missed that one.

Thanks,

Adam

Awesome!

Can not wait to read the part 2.

Thank you for sharing with useful survey. It necessary to know that mobile app development could help in your industry by installing customized software products.

Thanks for great posts. Let me mention about cheap home insurance that are from homeowners insurance companies. Compare free rates on homeowners insurance.

Thanks for sharing with us this useful review. You have nice chance to choose casino affiliate programs. The most common casino programs such as affiliates united program and great poker rooms such as redbet affiliates.

Post a Comment