SSRS is becoming a highly scalable and performant reporting solution, for most environments. SSRS is becoming more and more popular because of its price tag. It is really tough to compete with free. The only cost consideration that needs to be made is SQL licensing. With the uproar with BI and Share Point SSRS is becoming the premier reporting platform. As database professionals, we need to consider the performance consequences of all the code that executes on our production/reporting databases. SSRS is a great reporting tool but if left unchecked can be quite problematic. This post will strictly focus on the eternal question…. should I use a stored procedure, or should I use embedded SQL. I will be addressing this question from the DBA perspective, which is often neglected.

I will be very frank and say that I really do not see any benefits to using embedded TSQL from the DBA perspective. Embedded TSQL has a lot of cons that should deter any database professional from using it. So what are some of the problems in using embedded TSQL? Let’s name a few of the cons.

The Cons Of Embedded TSQL:

- Harder to manage security

- Report may break when schema changes

- Difficult to make changes to embedded TSQL

- Causes procedure cache to bloat

Note: I did not list the pros to using stored procedures, but the list is the inverse of the Embedded TSQL list.

As you can see, there are a lot of problems in choosing to use embedded TSQL. The first con to using embedded TSQL is security. It is extremely difficult to manage security without the use of stored procedures. When code logic is encapsulated in stored procedure, the DBA can easily apply permission to the stored procedure, without elevating permissions to the underlying objects. If embedded TSQL is used, the person executing the report must have underlying permissions to all objects referenced in the embedded TSQL, which makes maintaining embedded TSQL complicated because you really have no idea what code is actually being executed against your database. To get an idea of what permissions are needed to execute a report, you have to open the report or run a trace to get the TSQL.

Embedding TSQL in a SSRS report also can become a problem when the underlying database schema changes. The SSRS report has no dependencies on the database schema and any change can break a report. In this scenario, there may be additional downtime just to figure out and fix the problem. In most cases, the DBA has no idea that a schema change broke the report. Typically the problem does not surface until customers start complaining, which leads to the problem of changing the embedded TSQL. This is not to say that when stored procedures are used reports will not break, but SSMS offers better dependency checks to determine what objects are dependant, which decreases the likelihood of an report outage.

One of the biggest problems with embedded TSQL is modifying the TSQL code. To modify the embedded TSQL the developer has to download the report RDL and then make the change. Once the change has been made, the developer has to redeploy the report. These steps require a lot of time to implement and additional downtime is incurred. If a stored procedure is used, the only downtime incurred is the time taken to modify the stored procedure. Another benefit of a stored procedure is a developer or DBA can more easily test the report within the confines of SSMS, instead of having to use BIDS.

The absolute worse aspect of using embedded TSQL is it can bloat the procedure cache, which can severely degrade server performance. SSRS tries and does a good job at parameterizing most TSQL, but there are certain aspects of SSRS that cause the procedure cache to bloat. This is where I want to focus most of my attention because this is often the most overlooked aspect of embedded TSQL. There are two scenarios that I am currently aware of that can directly cause the procedure cache to bloat. The first scenario occurs when a multi-value parameter is used in conjunction with the IN clause. Multi-value parameters are treated a differently than standard parameters, in SSRS. When a multi-value parameter is used with the IN clause the SSRS engine submits the query to SQL Server using literal values in the IN clause. When literal values are used in the IN clause, the query is not considered parameterized, so the optimizer has to create a new query plan, unless an exact binary match already exists. Let’s have a look to see this example in action.

First let’s create the sample table and populate the table with data.

SET NOCOUNT ON

GO

USE [tempdb]

GO

IF object_id('tempdb.dbo.SSRS_Cache_Bloat') IS NOT NULL

BEGIN

DROP TABLE dbo.SSRS_Cache_Bloat;

END

GO

CREATE TABLE SSRS_Cache_Bloat(

ID INT IDENTITY(1,1) PRIMARY KEY CLUSTERED,

ColA VARCHAR(10),

ColB BIT

);

INSERT INTO dbo.SSRS_Cache_Bloat VALUES ('Adam',0);

INSERT INTO dbo.SSRS_Cache_Bloat VALUES ('Bob',1);

INSERT INTO dbo.SSRS_Cache_Bloat VALUES ('Chad',0);

INSERT INTO dbo.SSRS_Cache_Bloat VALUES ('Dave',1);

GO

IF object_id('tempdb.dbo.Lookup_Vals') IS NOT NULL

BEGIN

DROP TABLE dbo.Lookup_Vals;

END

GO

CREATE TABLE dbo.Lookup_Vals(

ColA CHAR(4)

);

INSERT INTO dbo.Lookup_Vals VALUES ('Adam');

INSERT INTO dbo.Lookup_Vals VALUES ('Bob');

INSERT INTO dbo.Lookup_Vals VALUES ('Chad');

INSERT INTO dbo.Lookup_Vals VALUES ('Dave');

Next create a new SSRS report. Create two data sets and a parameter, as defined below.

DataSet1 is the main report dataset.

select Id, ColA, ColB from SSRS_Cache_Bloat where ColA in(@var)

DataSet2 is the parameter dataset. You will need to make sure your parameter derives its values from this dataset.

select colA from dbo.Lookup_Vals

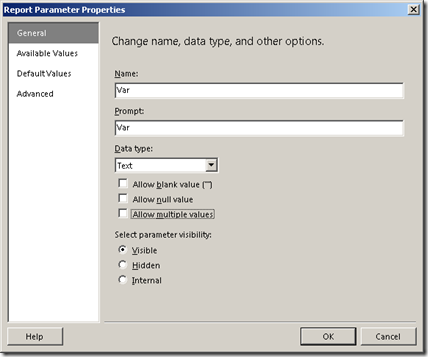

Make sure the parameter is set to use multi-value parameters, as shown below.

Once you have all the data sets configured. Preview the report in SSRS. When selecting your parameter values make sure to select more than one value. Here is a screenshot of my report.

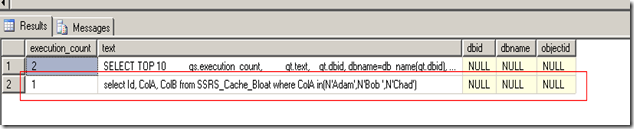

As you can see the multi-value parameter returned a row for all three parameter values. Let’s look at the query execution stats to see what SQL actually executed.

SELECT TOP 10

qs.execution_count,

qt.text,

qt.dbid, dbname=db_name(qt.dbid),

qt.objectid

FROM sys.dm_exec_query_stats qs

cross apply sys.dm_exec_sql_text(qs.sql_handle) as qt

ORDER BY qs.[last_execution_time] DESC

You should see an entry similar to the screenshot below.

As you can see, SSRS submitted TSQL with literal values specified in the IN clause. What do you think will happen, if we preview the report again with different parameter values? If you guessed that we will get a completely new plan, you would be right.

Can you imagine what happens when you have hundreds or thousands of differing options and hundreds or thousands of users? The plan cache will take a beating because so many plans with differing values will have to be stored. When so many plans exist in the procedure cache, you have less memory to store data pages in cache. Ultimately nothing good comes out of having a bloated procedure cache. Multi-value parameters are not the only cause of bloating the cache. The next scenario that bloats the procedure cache is using hard coded values in a parameter list.

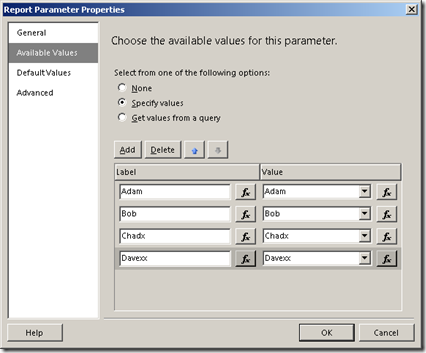

Using hard coded values in a parameter list seems like a harmless gesture, but the reality is the SSRS engine guesses at the size of the string which directly impacts whether an existing plan can be used. In our SSRS report change the dataset that is used to query the Lookup table to use fixed values, as shown below.

Note: I added x to some of the values so that the length varies among strings.

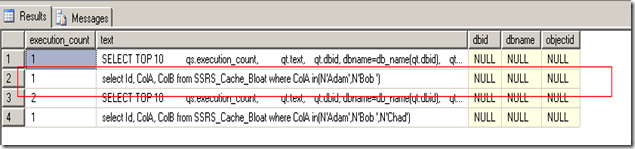

Let’s preview the report, to see what happens. I used the values “Adam” for the first execution and “Bob” for the second execution. You should see entries like below in your procedure cache.

The primary difference between the two execution plans is the size of the declared variable. In the case of “Adam” the variable was declared as a nvarchar(4) and for “Bob” a nvarchar(3). Because the size is different a new query plan was created.

These are the couple of scenarios that I am currently aware of that cause the plan cache to behave in this manner. I am sure there are other quirks that can cause this problem. So the big question left on the table is…. how do I fix this problem? The answer is to use stored procedures.

I will start by fixing scenario two. Create the following procedure in the database.

CREATE PROCEDURE usp_Fix_Scenario2(@var varchar(10))

AS

BEGIN

SELECT id,ColA,[colB]

FROM dbo.SSRS_Cache_Bloat

WHERE ColA IN(@var)

END

GO

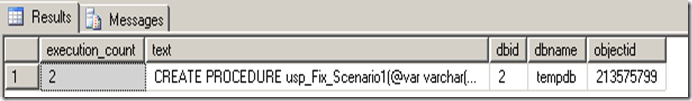

You will see a single entry in the procedure cache, for both “Adam” and “Bob”. As you can see the execution count is at two, which means the optimizer reused an existing plan.

Now let’s fix scenario one.

CREATE PROCEDURE usp_Fix_Scenario1(@var varchar(100))

AS

BEGIN

DECLARE @x XML

SET @x = '<i>' + REPLACE(@var,',','</i><i>') + '</i>'

SELECT id,ColA,[colB]

FROM dbo.SSRS_Cache_Bloat

WHERE ColA IN(

SELECT x.i.value('.','varchar(10)')

FROM @x.nodes('/i') x(i)

)

END

GO

As you can obviously see using stored procedures is by far a best practice. Stored procedures allow the greatest security, flexibility, manageability, and performance. I really cannot see a reason to use embedded TSQL at all and hopefully at this point you feel the same way. All-in-all, we learned a valuable lesson in this post. You cannot always trust that easier is better even if Microsoft says it is okay. SSRS is tool written with the developer in mind and the DBA perspective is neglected. If DBAs knew what SSRS is really doing behind the scenes, embedded TSQL would be outlawed. We as DBAs have to know and expose potential performance problems for all applications including Microsoft applications. I hope that my exposing these flaws within SSRS, will help you and your environment adhere to better SSRS practices.

Until next time, happy coding.

8 comments:

that’s good, but you can try another way of excel file repair

Couple of other reasons why we should use sprocs - reusability - i.e. multiple reports can call on same sproc *and* because of this we'll have consistency in the different reports

wonderful piece of information, I had come to know about your blog from my friend Nandu , Hyderabad, I have read at least 7 posts of yours by now, and let me tell you, your website gives the best and the most interesting information. This is just the kind of information that I had been looking for, I'm already your RSS reader now and I would regularly watch out for the new posts, once again hats off to you! Thanks a ton once again, Regards, Difference sql and tsql

very instructive, opens a lot of doors ;-).

BTW I did it with a function wich splits my string and return a table, it is much faster than the xml way. 44 secs for 1447 rows in xml versus 33 secs for 1447 rows the split way.

It depends on the environment. in a small company:

•Harder to manage security

Our env. doesn't store protected info and we manage security in the SSRS report manager

•Report may break when schema changes

If I add a column, the report still works. If I remove or rename column, I still have to update rdl

•Difficult to make changes to embedded TSQL

Tsql is Tsql. Either in SP or in property of the report. The same

•Causes procedure cache to bloat

We run 100 reports daily and we have plenty of memory to keep all QEPs in a cache.

One of the questions I have is if the SSRS portal is on a different server than the database, will the use of embedded tsql cause heavier performance on the ssrs server whereas a stored procedure will do the crunching on the database server before returning the result sets?

"Report may break when schema changes"

A stored proc can break when the schema changes, so I don't get this argument.

"Difficult to make changes to embedded TSQL"

Why? I've never had a problem with it.

Frankly, I think having everything in one place (in the report) is far easier. You don't have to keep the report in sync with a stored proc. You don't have to worry about accidentally deploying one without the other.

If you run your report against multiple server, you need to have your stored proc deployed (and kept in sync) on all servers.

Seems like there are plenty of arguments both ways.

Thanks for this blog, keep sharing your thoughts like this...

Embedded Systems Training in Chennai

Embedded Training Institute in Coimbatore

Post a Comment