I have been getting a lot of questions regarding indexes over the past few weeks, so I thought I would make another post about indexes. In this post, I will be focusing on covering indexes. A covering index is an index that covers all the columns for a given query. A covering index can be created by either, adding the columns to the index key, or by adding the columns to the include clause of the create index statement. I will focus on the key differences between adding columns to the index key and adding columns to the INCLUDE clause. I will look at the differences in size, statistics, and the execution plan.

Let’s get started by creating our test table.

USE [tempdb]

GO

SET NOCOUNT ON;

GO

IF EXISTS(SELECT 1 FROM sys.tables WHERE NAME = 'TestData')

BEGIN

DROP TABLE dbo.[TestData];

END

GO

CREATE TABLE dbo.TestData(

RowNum INT PRIMARY KEY,

SomeId INT,

SomeCode INT

);

GO

INSERT INTO dbo.[TestData] (

[RowNum],

[SomeId],

[SomeCode]

)

SELECT TOP 1000000

ROW_NUMBER() OVER (ORDER BY t1.NAME) AS RowNum,

ABS(CHECKSUM(NEWID()))%2500+1 AS SomeId,

CASE

WHEN ROW_NUMBER() OVER (ORDER BY t1.NAME) % 2 = 0

THEN 1

ELSE 0

END AS SomeCode

FROM

Master.dbo.SysColumns t1,

Master.dbo.SysColumns t2

GO

Now that we have our test table, I will create a covering index using the INCLUDE clause.

CREATE INDEX ncl_idx_TestData_Cover1 ON dbo.TestData(SomeID) INCLUDE(SomeCode);

GO

I will issue a simple query to select data from the table, using the new index.

SELECT

someid,

somecode

FROM dbo.[TestData]

WHERE

someid = 500

AND somecode = 1

The results of this query are inconsequential for our purposes, so I will not post them here; however, I will post the key attributes of the execution plan.

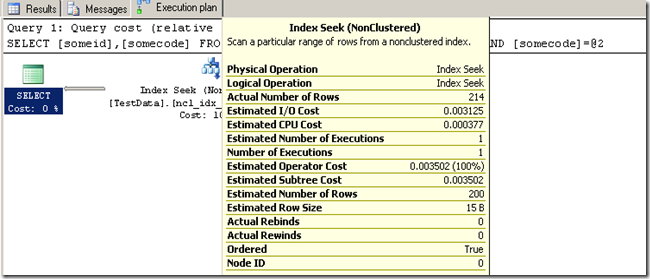

The above screenshot shows that our covering index is used. The primary thing to note here is deviation between the actual and the estimated number of rows. I will get into why these numbers deviate later in the post.

Let’s have a look at the space consumed by the index. I will issue a system stored procedure called sp_spaceused to obtain the space consumption details.

EXEC sp_spaceused 'dbo.Testdata'

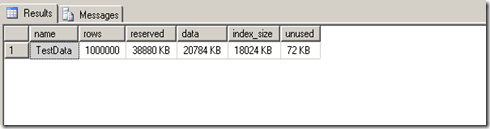

As you can see from the screenshot above, our index size is 18008 KB or 17.58 MB = 18008 / 1024. Now that we have our index size, let’s look at the statistics. I will be using the DBCC command show_statistics to display statistical information about our index.

DBCC show_statistics('dbo.Testdata','ncl_idx_TestData_Cover1')

The main thing to note here is that the column “SomeCode” is not available in the density vector. The density vector is located in the second result set produced by DBCC show_statistics. Essentially, density is the uniqueness of the column data. For example, the density of some id is .0004. The density calculation can be expressed as 1/(#Distinct Values). In the case of “SomeId” the density can be calculated as 1/2500= .0004. The “SomeId,RowNum” density is calculated as 1/1000000 = .000001. I know the number of distinct values is equal to the number of rows because “RowNum” is a unique column. If you want to calculate density programmatically, you can use a group by clause and a little creativity.

Note: you can use differing columns in the group by clause to get their respective density.

SELECT 1./SUM(cnt)

FROM (

SELECT 1 AS cnt

FROM dbo.testdata

GROUP BY someid,RowNum

) AS a

The optimizer uses density to estimate cardinality (estimated number of rows) when building a query plan. Obviously, the better the cardinality the better the query plan.

A benefit of the INCLUDE clause is the ability to supersede index limitations, such as the key column limit of 16, the index key size limitation (900 bytes), and even include columns with data types like VARCHAR(MAX), VARBINARY(MAX), TEXT, IMAGE etc..

Next, I will create the second covering index, which includes all columns in the index key. I will drop the original covering index, so I can accurately measure the index size.

DROP INDEX ncl_idx_TestData_Cover1 ON dbo.[TestData];

GO

----===== Covering index that includes both columns in the index key

CREATE INDEX ncl_idx_TestData_Cover2 ON dbo.TestData(SomeID,SomeCode)

GO

Now let’s run the same select query again.

SELECT

someid,

somecode

FROM dbo.[TestData]

WHERE

someid = 500

AND somecode = 1

As you can see the estimated number of rows deviates much less, from the actual, than the first covering index. The difference in cardinality estimates is pretty negligible for this sample, but in a real world scenario, the deviation can be much larger.

Let’s have a look at the space used.

Note: You can use the same code as before.

As shown above, the index size is larger, when columns are added to the index key. The new covering index is 18024 KB or 18024 / 1024 = 17.60 MB. This index is larger because the columns are stored at all levels of the index, whereas, the include clause stores the column values at the leaf level only. So why would you add columns to the index key? This answer brings us to the statistics maintained by the new index.

Note: You can use the same code as before but change the name of the index to ncl_idx_TestData_Cover2.

If you had not noticed from the screen shot above, SQL Server is maintaining the density of the “SomeCode” column in relation to the “SomeId” column, which was not the case for covering index 1. This is why the optimizer is able to more accurately estimate cardinality. In this case, the density value is represented as .0002. Adding columns to the index key allows the optimizer to make better cardinality estimates, which may translate into more optimal query plans.

So what is the verdict? My findings indicate that all covering indexes are not created equal. Both types of covering index has its own strengths and weaknesses. The type you choose will depend on your query and your business requirements. There are a lot of advantages to using both types of covering indexes. You will have to weigh performance against manageability and choose which is best for your environment.

References:

- Index with included columns: http://msdn.microsoft.com/en-us/library/ms190806.aspx

- Create Index: http://msdn.microsoft.com/en-us/library/ms188783.aspx

- DBCC Show_Statistics: http://msdn.microsoft.com/en-us/library/ms174384.aspx

- Sp_Spaceused: http://msdn.microsoft.com/en-us/library/ms188776.aspx

No comments:

Post a Comment